LiveSnippets: Voice-based Live Authoring of Multimedia Articles about Experiences

Abstract

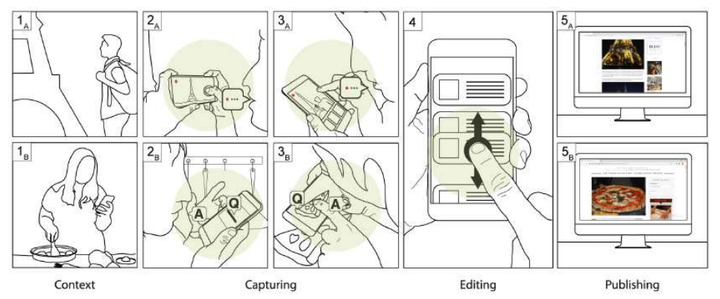

We transform traditional experience writing into in-situ, voice-based multimedia authoring. Documenting experiences digitally in blogs and journals is a common activity that allows people to connect socially by sharing their experiences (e.g., travelogues). However, such documentation can be time-consuming and cognitively demanding because it is typically done OUT-OF-CONTEXT, after the actual experience. We propose in-situ voice-based multimedia authoring (IVA), an alternative workflow that enables IN-CONTEXT experience documentation. Unlike the traditional approach, IVA encourages content creation during the experience through voice-based multimedia input and stores the results as multimodal “snippets”. The snippets can be rearranged into multimedia articles and published after light copy-editing. To improve the quality of output derived from impromptu speech, we introduce Q&A scaffolding to guide content creation. We implement the IVA workflow in an Android application, LiveSnippets, and evaluate it qualitatively in three scenarios: travel writing, recipe creation, and product review. Results demonstrate that IVA can effectively lower the barrier to writing, with acceptable trade-offs in multitasking.